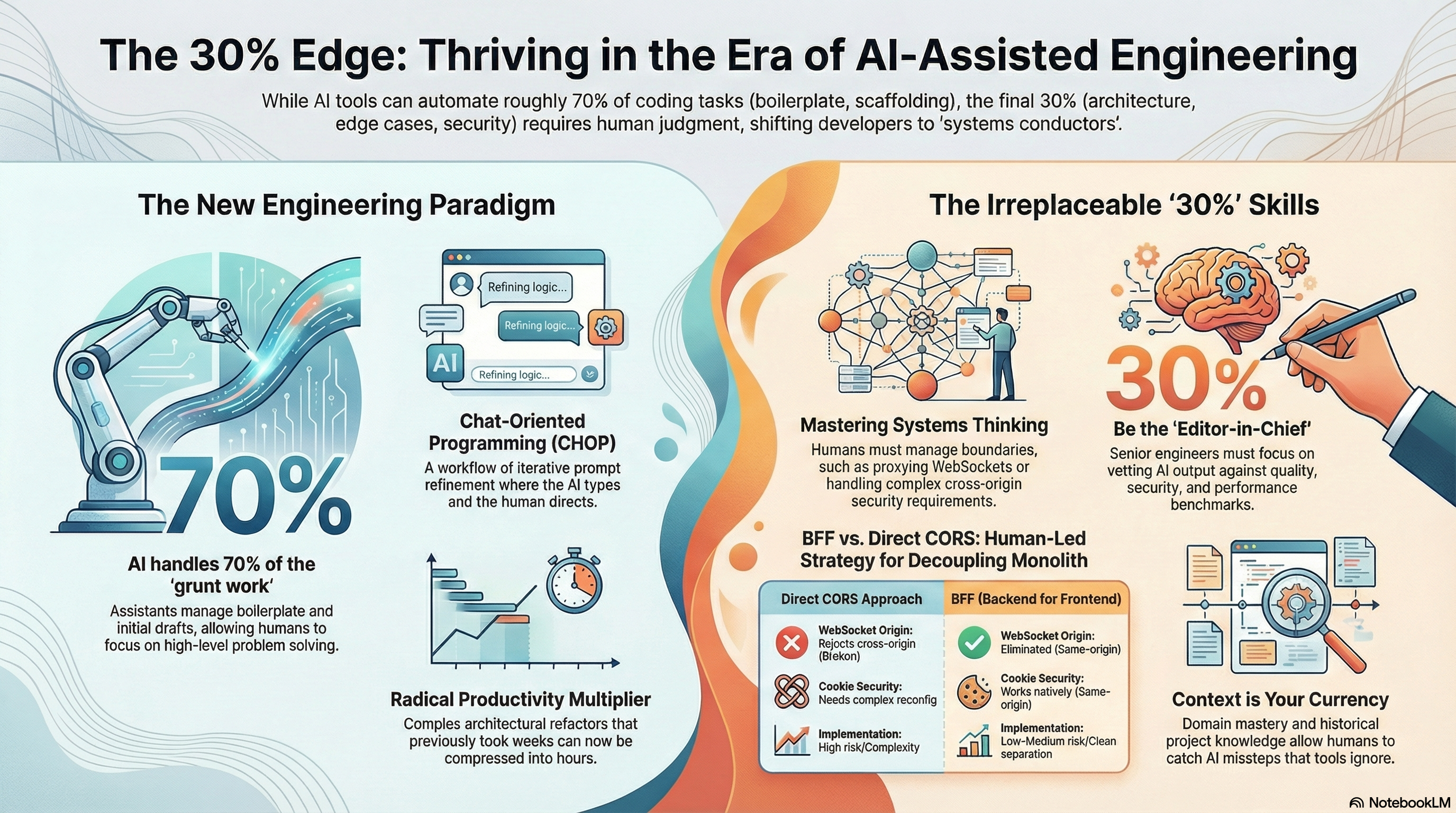

The paradigm of software development is shifting from “writing code” to “directing intent.” As AI coding assistants evolve from simple autocomplete tools to autonomous agents capable of large-scale codebase refactoring, the role of the senior architect is becoming that of an Editor-in-Chief and a Systems Conductor.

Recently, I pressure-tested this new “AI-Engineering” workflow by attempting a non-trivial architectural change: decoupling the n8n frontend from its monolithic backend using a Backend-for-Frontend (BFF) pattern. Using Claude Code in the terminal and a “Human-in-the-Loop” strategy, I completed in a matter of hours a complex refactor that would typically take weeks.

1. The Strategy: Planning the Decoupling

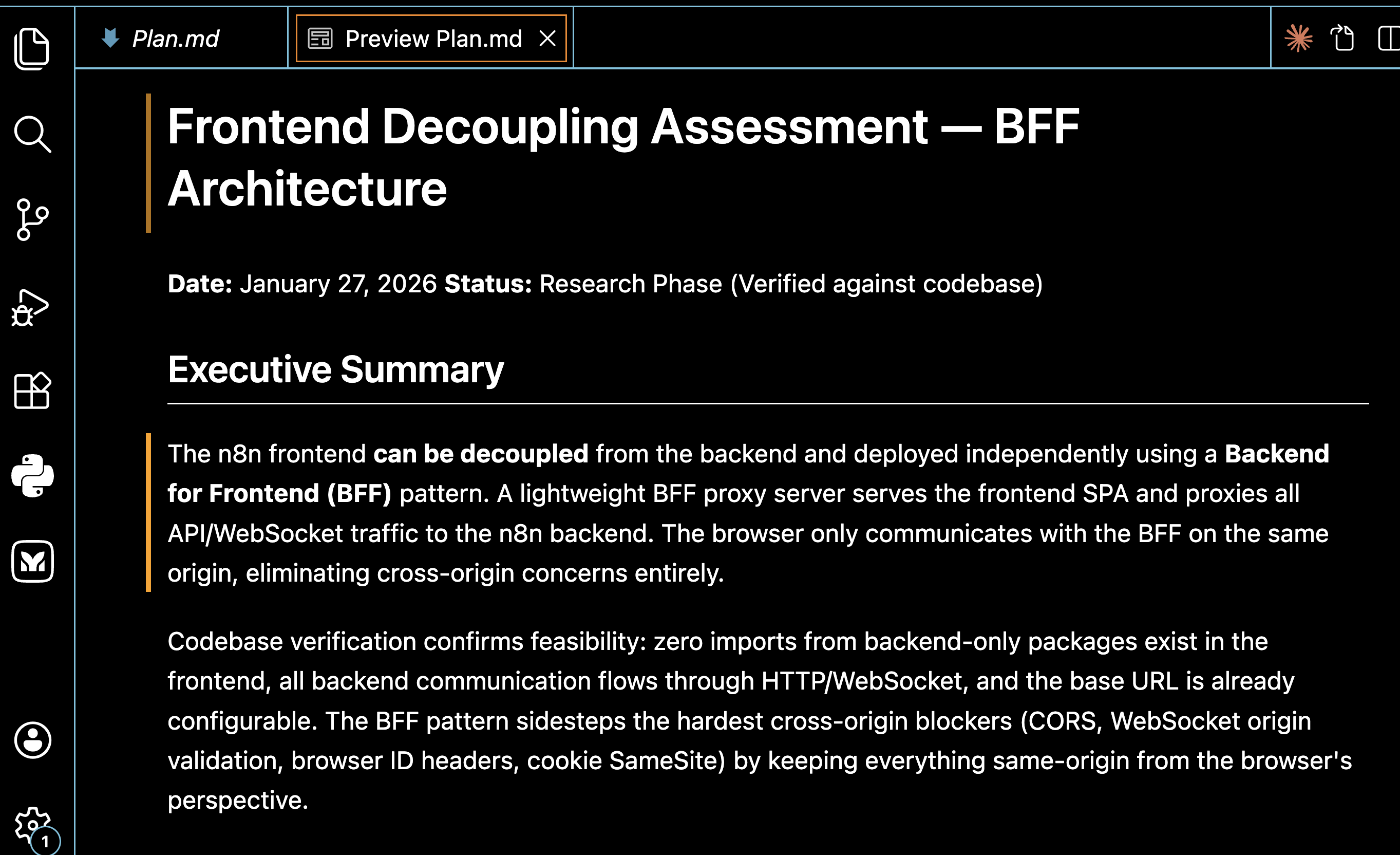

Before a single line of code was changed, the AI and I ran a research phase to verify the codebase against the proposed architecture. This is where the human “30%” (the irreplaceable engineering judgment) comes into play.

The Assessment Phase

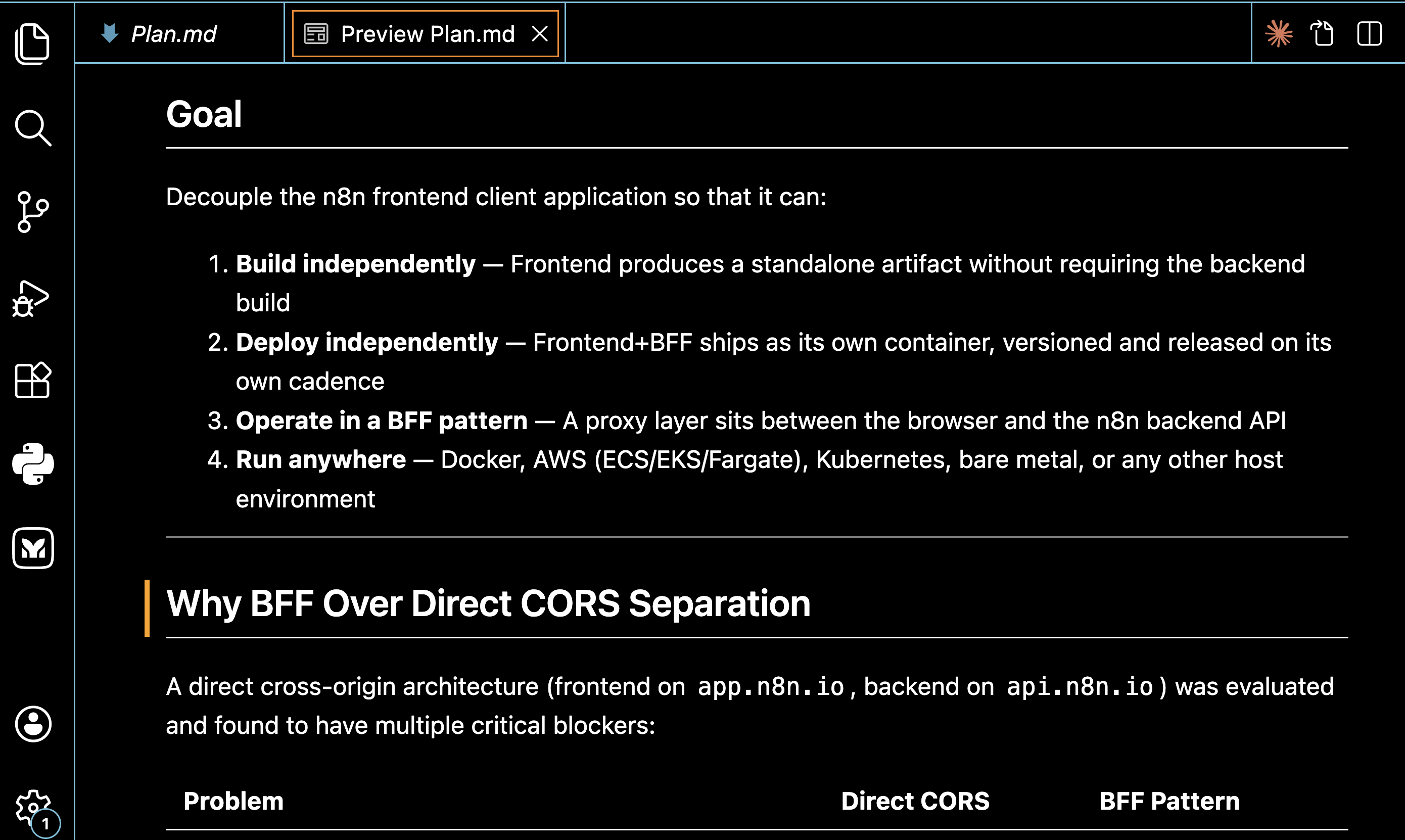

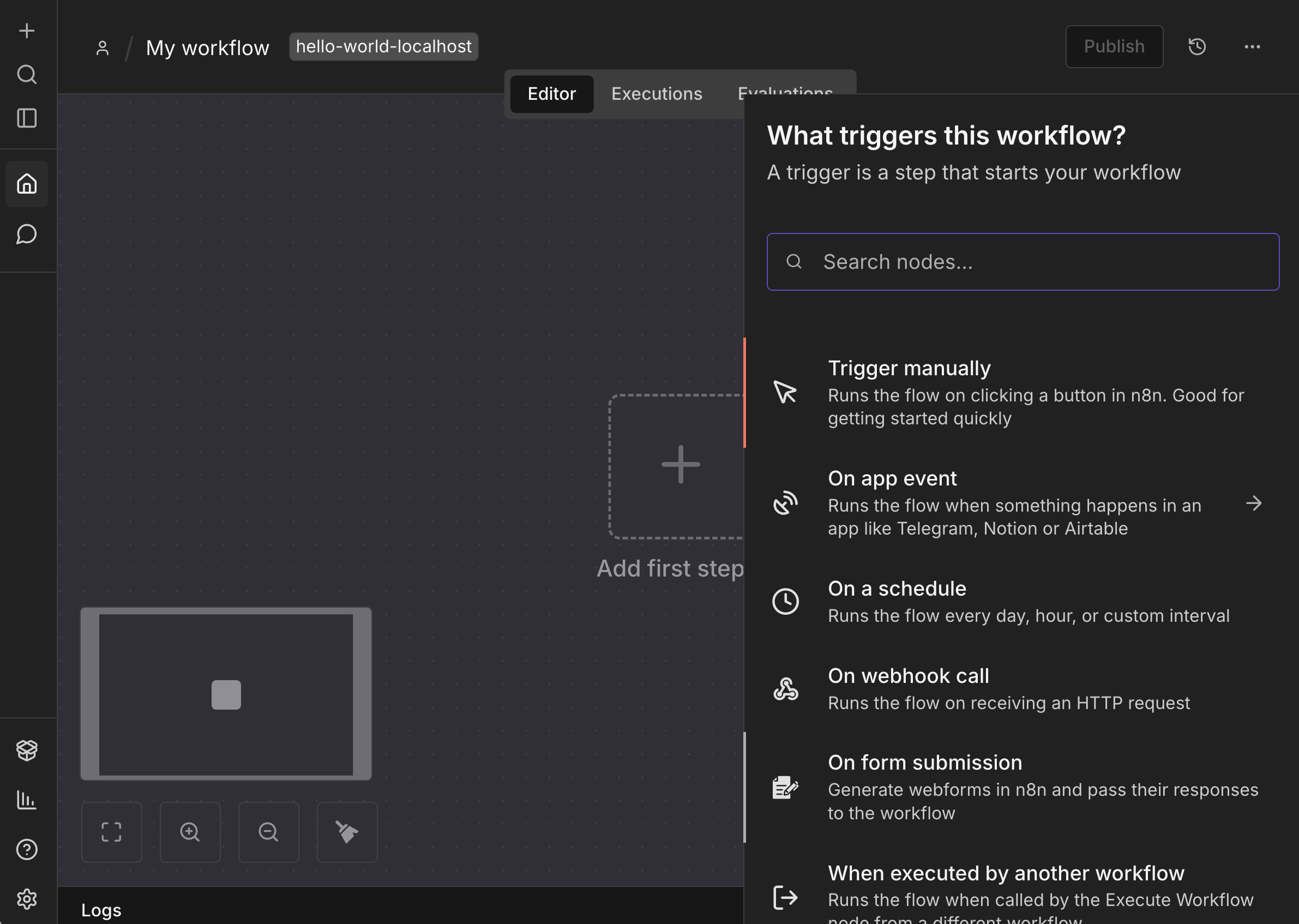

The goal was to move n8n from a monolithic deployment (where the backend serves static assets) to a decoupled model where the frontend:

- Builds independently via Vite.

- Deploys independently in its own container.

- Operates via a BFF proxy to eliminate CORS and WebSocket origin issues.

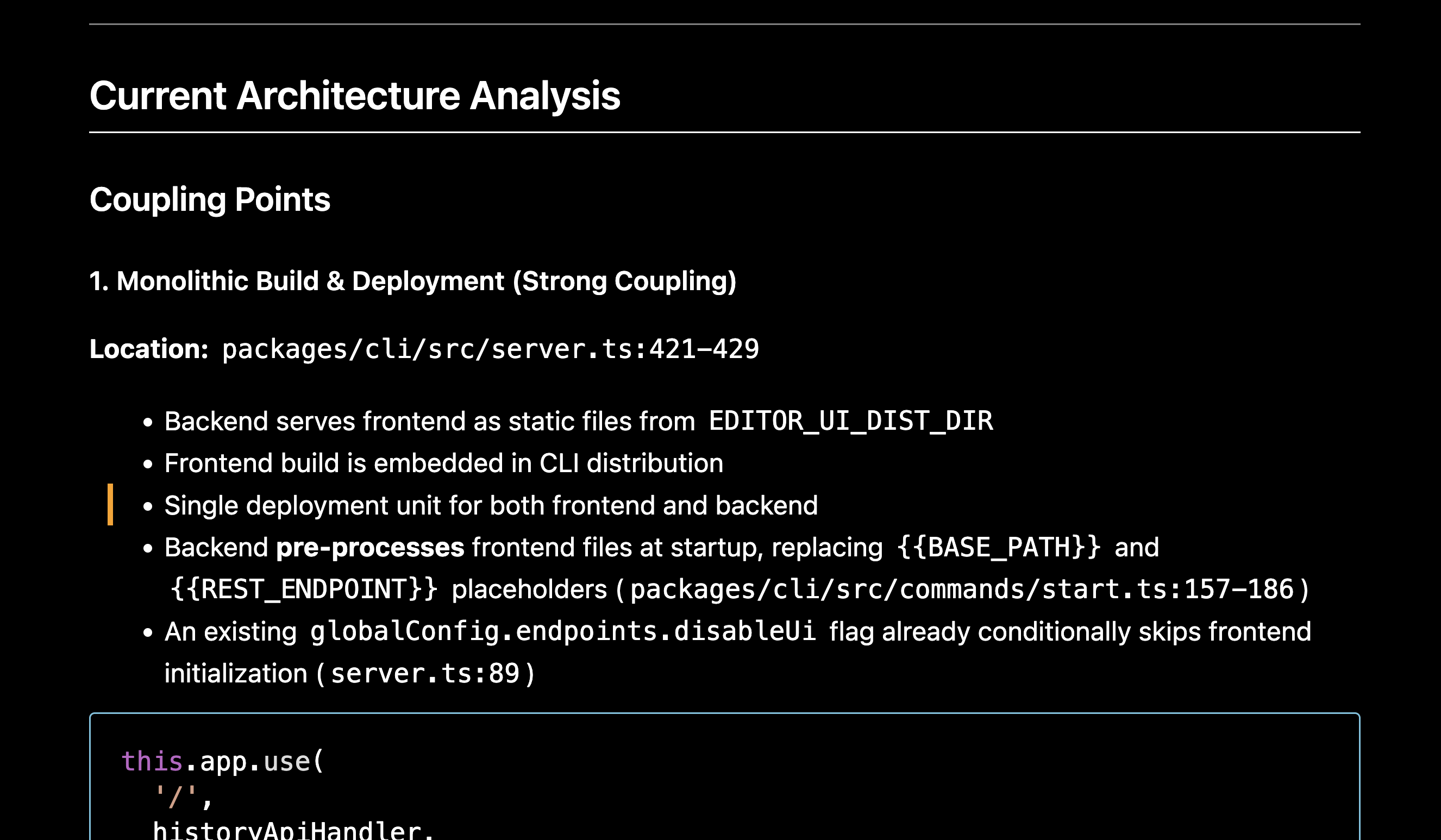

As noted in the Frontend Decoupling Assessment, we identified critical coupling points, such as the server.ts file serving static assets and the start.ts file preprocessing frontend placeholders like {{BASE_PATH}}. By using the AI to perform a comprehensive “Codebase Verification,” we confirmed that zero imports from backend-only packages existed in the frontend, making the refactor feasible.
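To make the “Codebase Verification” step concrete, here is a minimal sketch of how a check for forbidden imports could be automated. It is not the verification the AI actually ran. The function names are hypothetical, the package names are supplied by the caller, and the regex scan is a simplification that a real TypeScript parser would do more reliably.

```typescript
// Sketch only: a regex-based scan, not a real TypeScript parser.
// All names here are hypothetical and chosen for illustration.
interface ForbiddenImport {
  file: string;
  specifier: string;
}

// Patterns for `... from 'x'`, side-effect `import 'x'`,
// and dynamic `import('x')` / CommonJS `require('x')`.
const IMPORT_PATTERNS: RegExp[] = [
  /\bfrom\s*['"]([^'"]+)['"]/g,
  /\bimport\s*['"]([^'"]+)['"]/g,
  /\b(?:import|require)\s*\(\s*['"]([^'"]+)['"]\s*\)/g,
];

// Collects every module specifier the patterns can find in one source file.
function findImportSpecifiers(source: string): string[] {
  const specifiers: string[] = [];
  for (const pattern of IMPORT_PATTERNS) {
    pattern.lastIndex = 0; // global regexes keep state between calls
    let match: RegExpExecArray | null;
    while ((match = pattern.exec(source)) !== null) {
      specifiers.push(match[1]);
    }
  }
  return specifiers;
}

// True for the package itself ("pkg") and its subpaths ("pkg/sub"),
// but not for a different package that merely shares a prefix ("pkg-extra").
function isFromPackage(specifier: string, pkg: string): boolean {
  return specifier === pkg || specifier.startsWith(pkg + '/');
}

// `files` maps a file path to its source text; reading from disk is left out.
function findForbiddenImports(
  files: Record<string, string>,
  forbiddenPackages: string[],
): ForbiddenImport[] {
  const found: ForbiddenImport[] = [];
  for (const file of Object.keys(files)) {
    for (const specifier of findImportSpecifiers(files[file])) {
      if (forbiddenPackages.some((pkg) => isFromPackage(specifier, pkg))) {
        found.push({ file, specifier });
      }
    }
  }
  return found;
}
```

An empty result for the frontend sources would correspond to the “zero imports” finding. Because the scan is textual, it can also match import-like strings inside comments, so a non-empty result still needs a human look.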

2. The Build: Human-in-the-Loop Execution

With a validated plan, we moved into the Build Phase. This is where the “Chat-Oriented Programming” (CHOP) cycle begins—an iterative loop of prompting, reviewing, and refining.

Step-by-Step Order of Operations

The process followed a systematic sequence of technical execution:

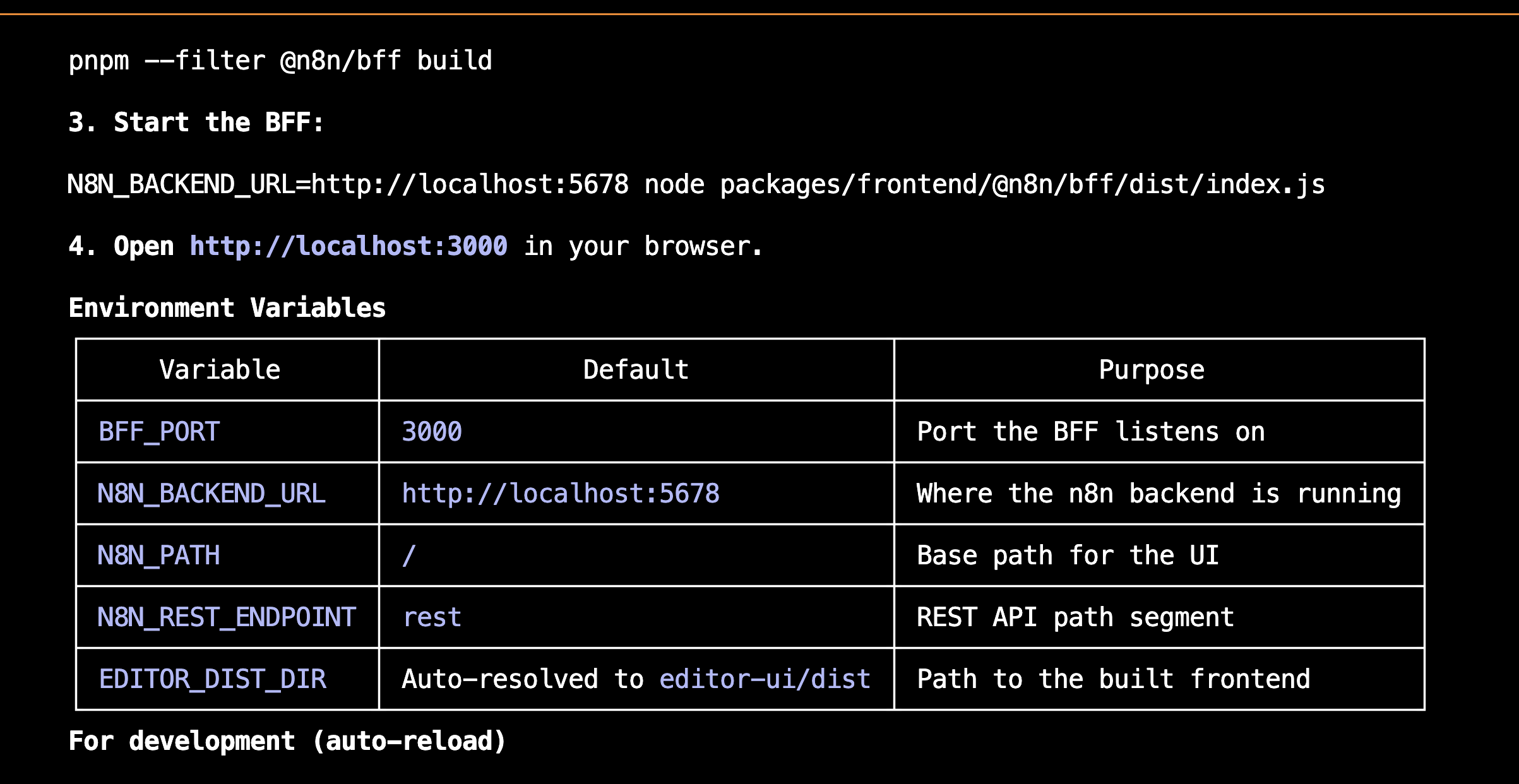

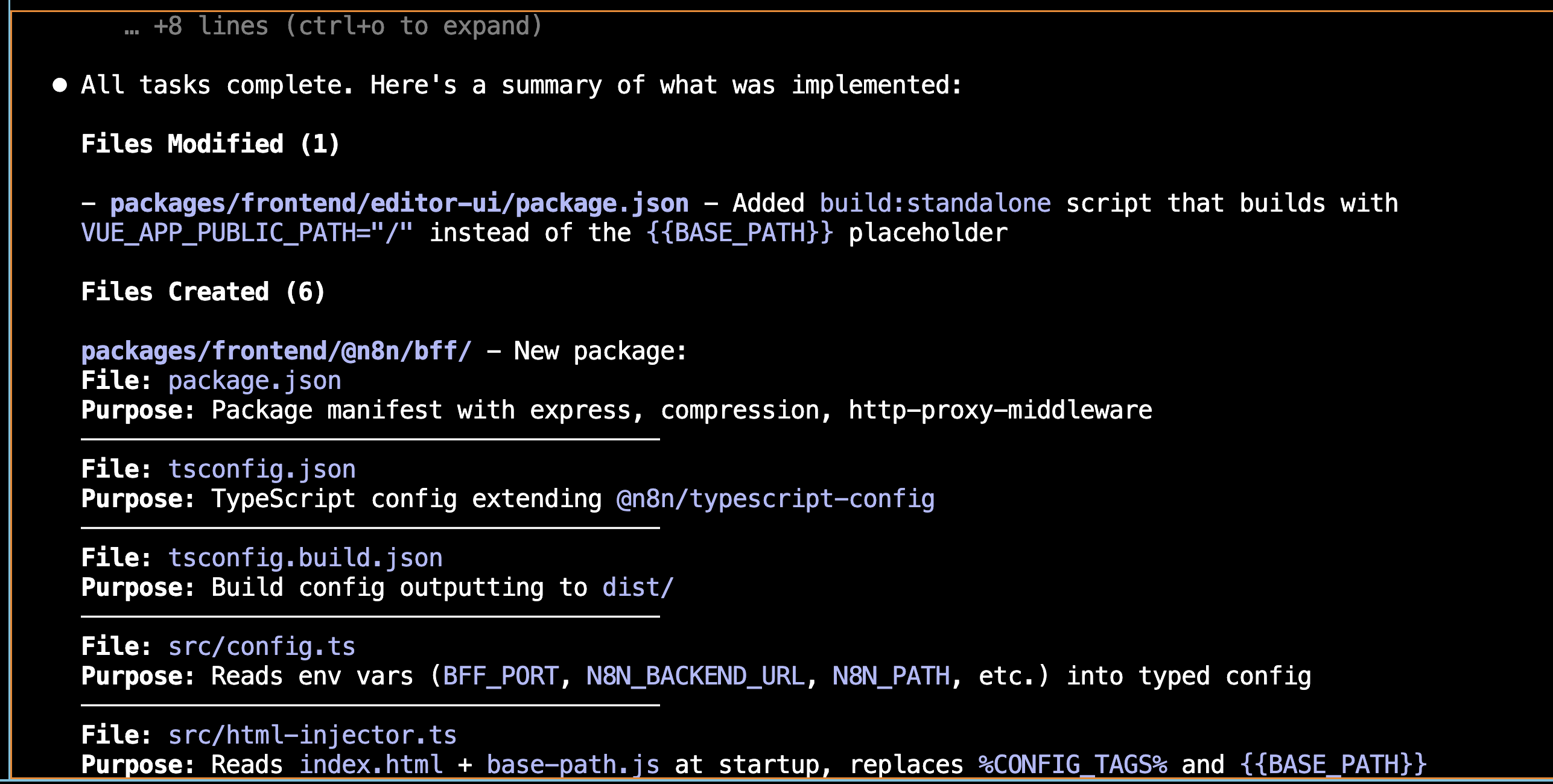

- Terminal Setup & Package Creation: We began by creating a new package at packages/frontend/@n8n/bff/. This included the creation of the package.json, tsconfig.json, and the core proxy logic.

- Infrastructure as Code: We implemented src/html-injector.ts to replace the backend’s old regex-based placeholder replacement.

- Environmental Parity: We established a suite of new environment variables (BFF_PORT, N8N_BACKEND_URL) to allow the frontend to communicate with the backend across different host environments.
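The last two steps can be sketched roughly as follows. This is an illustration under stated assumptions, not the contents of the actual src/html-injector.ts, which is not reproduced in this post. The function names, the BffConfig shape, and the default values (port 3000 for the BFF and http://localhost:5678 for the backend, taken from the ports mentioned in this article) are assumptions.

```typescript
// Sketch only: names, shapes, and defaults are assumptions for illustration.
interface BffConfig {
  port: number;
  backendUrl: string;
}

// Reads BFF settings from an environment-like object (for example process.env).
function loadBffConfig(env: Record<string, string | undefined>): BffConfig {
  const rawPort = env.BFF_PORT ?? '3000'; // assumed default
  const port = Number(rawPort);
  if (!Number.isInteger(port) || port < 1 || port > 65535) {
    throw new Error(`BFF_PORT must be an integer between 1 and 65535, got "${rawPort}"`);
  }

  const backendUrl = env.N8N_BACKEND_URL ?? 'http://localhost:5678'; // assumed default
  try {
    new URL(backendUrl); // throws on a malformed URL
  } catch {
    throw new Error(`N8N_BACKEND_URL is not a valid URL: "${backendUrl}"`);
  }

  return { port, backendUrl };
}

// Replaces {{KEY}} placeholders with plain string operations instead of a regex.
// Values are inserted verbatim, so they must come from trusted configuration.
function injectPlaceholders(html: string, values: Record<string, string>): string {
  let result = html;
  for (const key of Object.keys(values)) {
    result = result.split(`{{${key}}}`).join(values[key]);
  }
  return result;
}
```

Passing the environment in as a parameter, rather than reading a global inside the function, keeps both helpers easy to test and makes the same code usable across different host environments.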

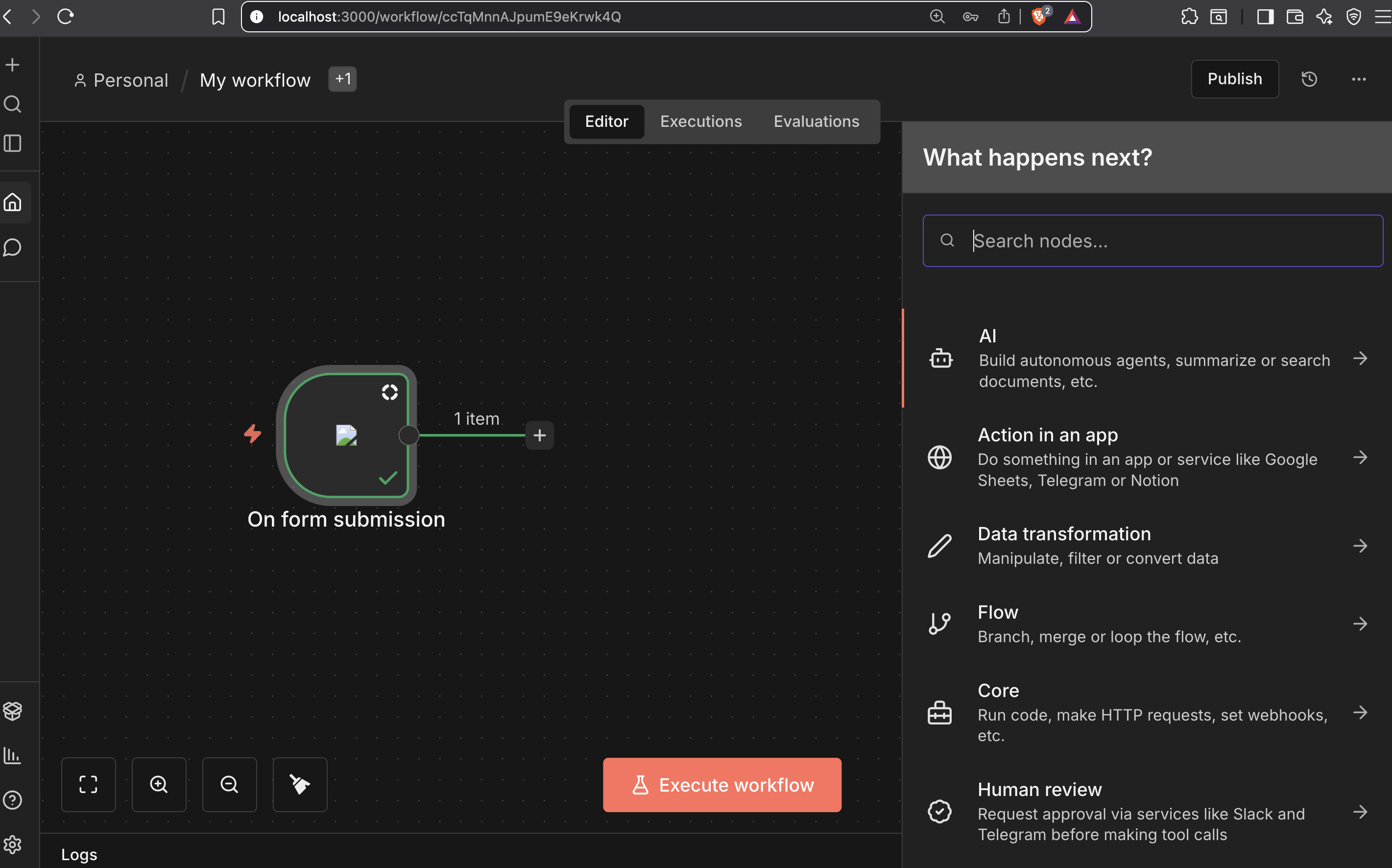

3. The Result: A Decoupled Reality

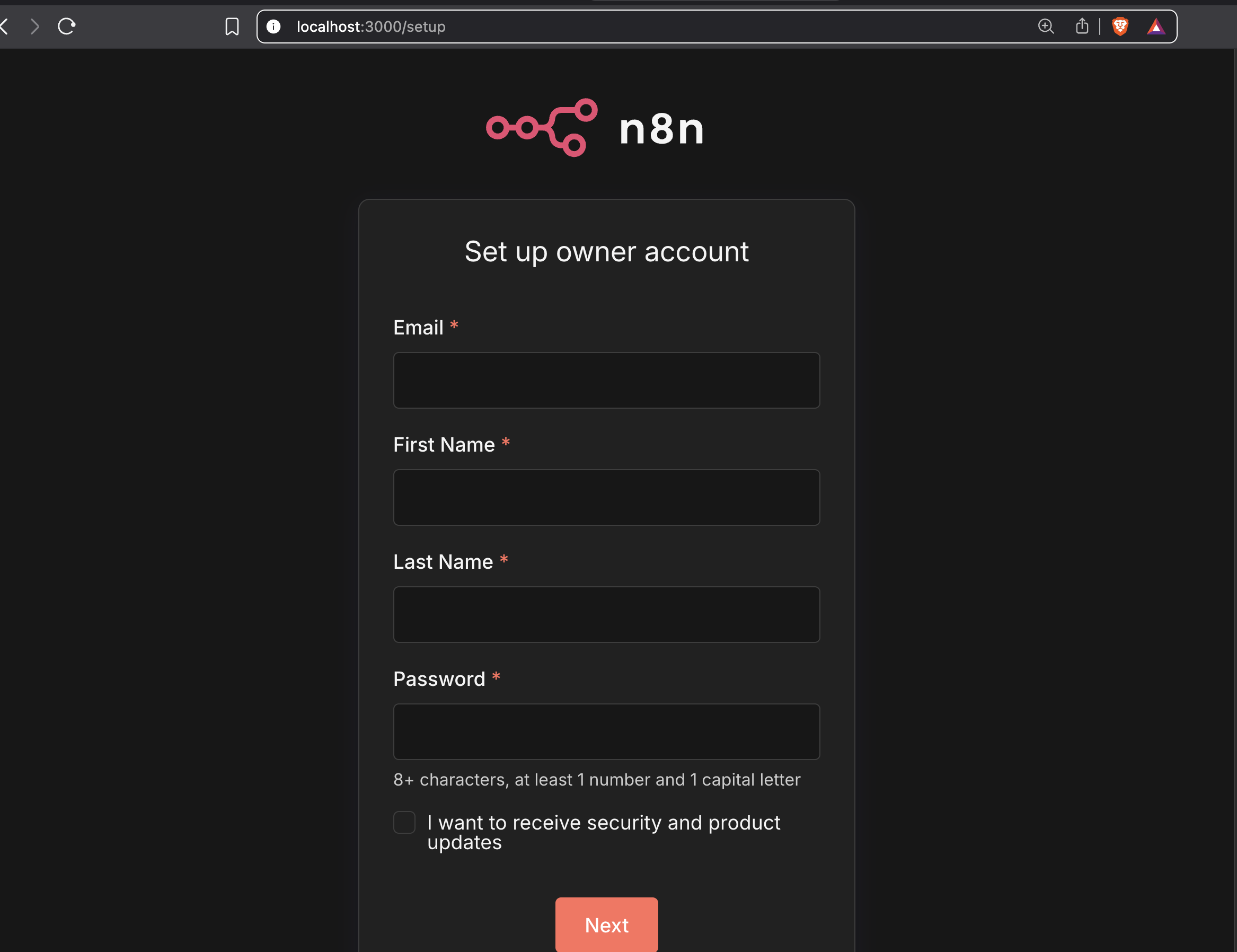

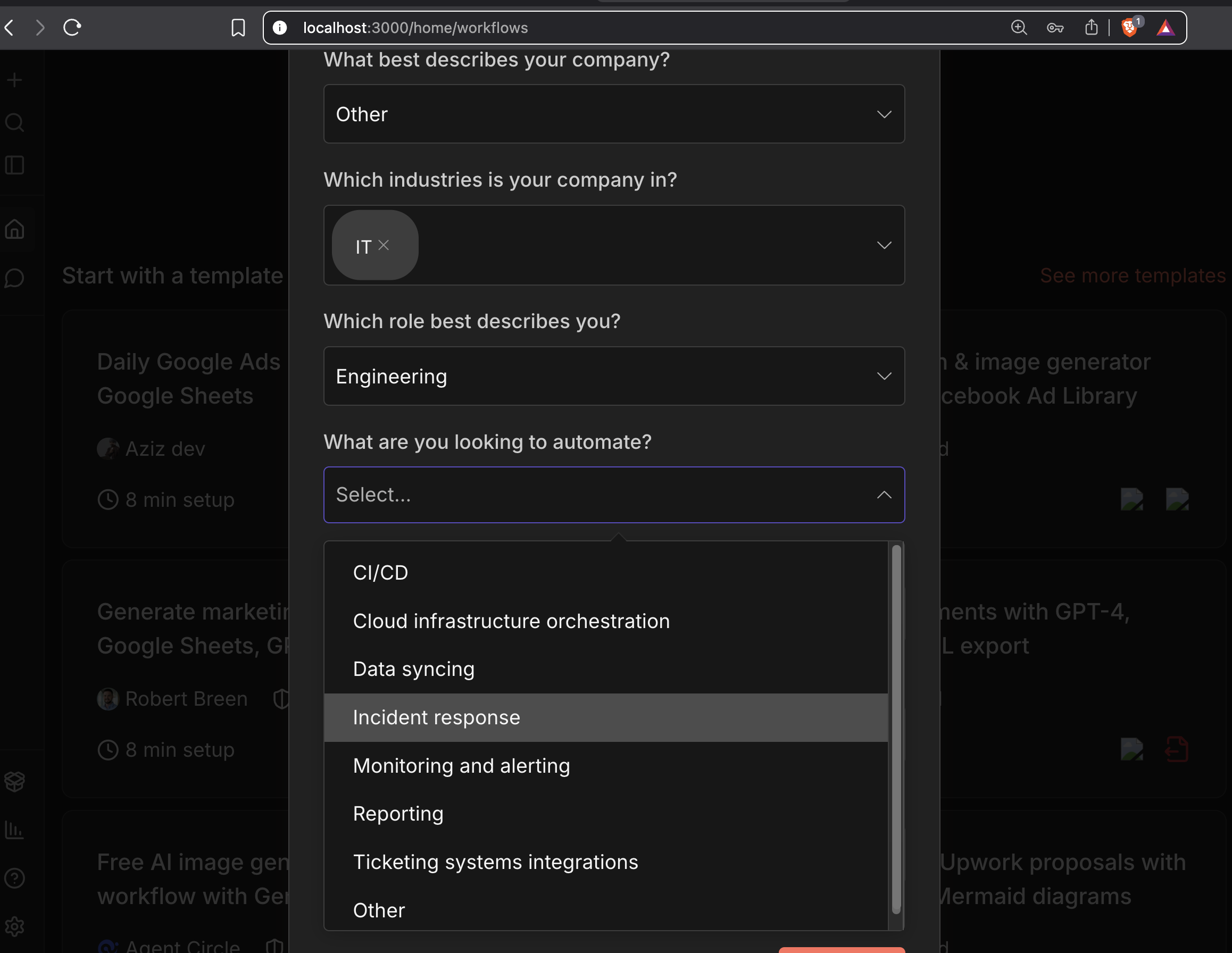

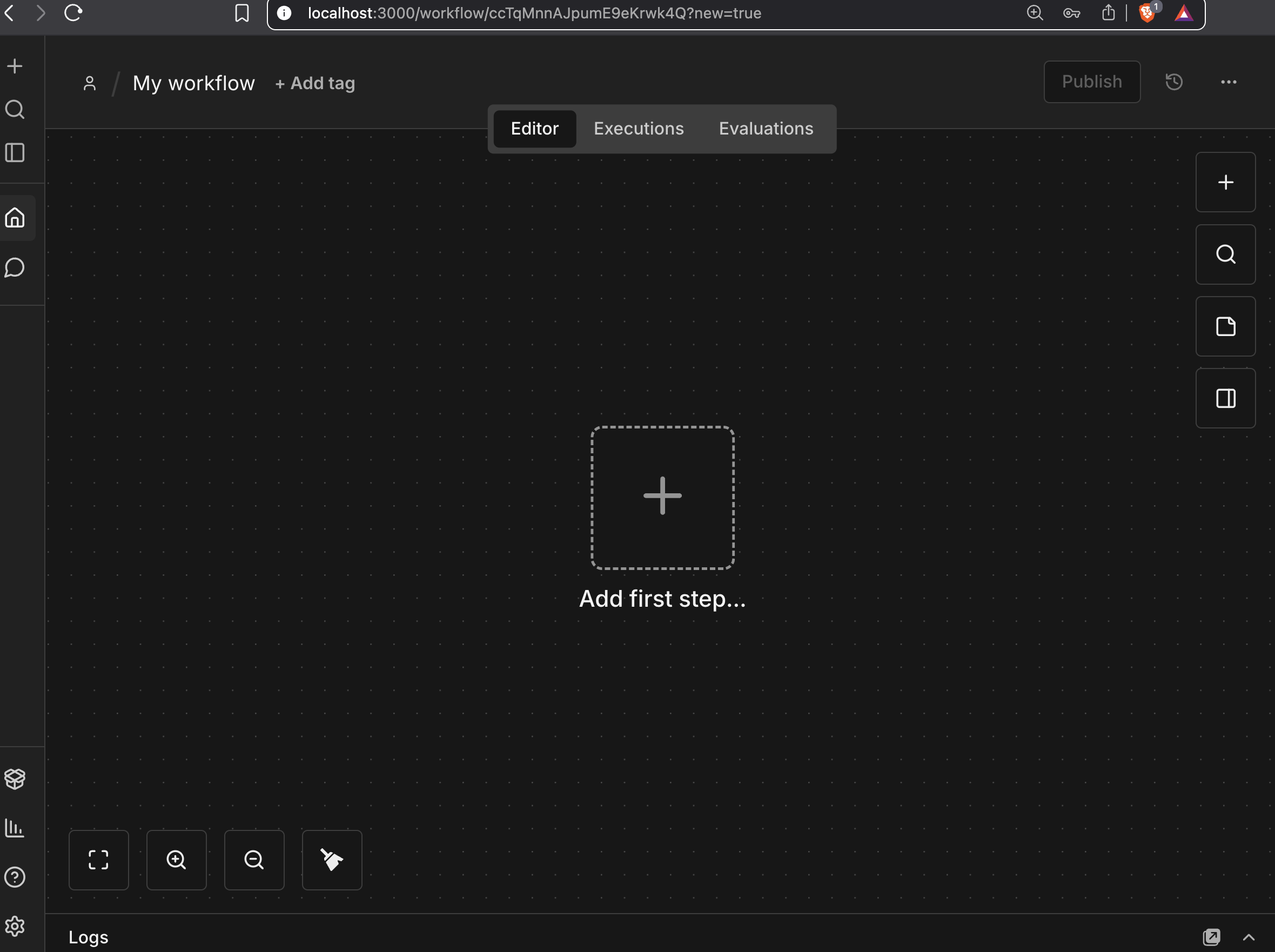

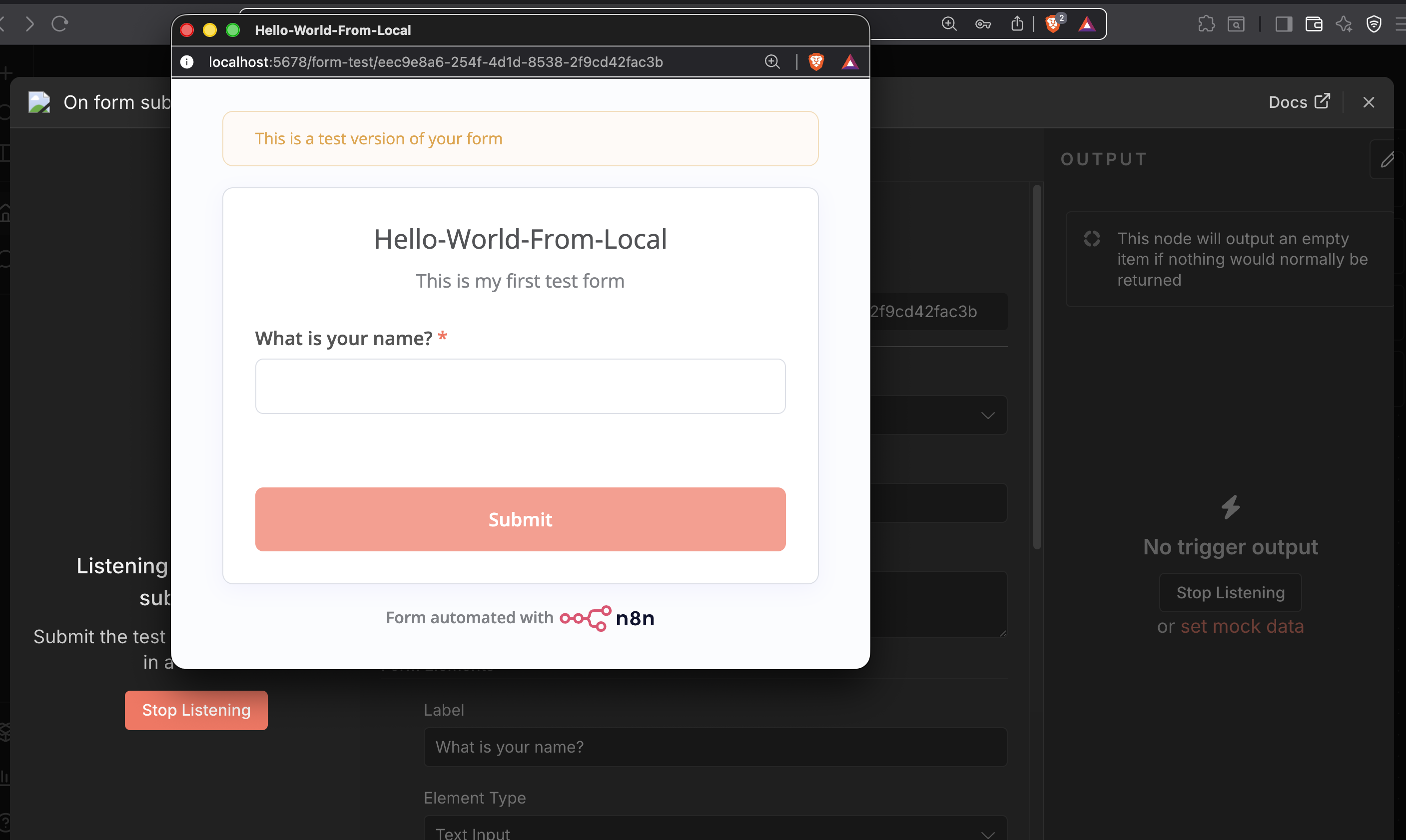

The success of the refactor is visible in the final UI. By navigating to localhost:3000 (the BFF port) instead of the default 5678, we successfully rendered the n8n setup screen and the workflow editor.

Crucially, the “Human-in-the-Loop” aspect ensured that we didn’t just “make it run”; we ensured the WebSocket push updates for workflow executions worked through the proxy—a common failure point for generic AI code generation.
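One reason WebSockets are a common failure point is that they start as an HTTP upgrade request, which a proxy has to recognise and forward differently from ordinary requests. Below is a minimal sketch of that recognition step, following the handshake rules in RFC 6455. The function name is hypothetical, and this is not the detection logic of the BFF described here or of any particular proxy library.

```typescript
// Sketch only: recognises a WebSocket handshake from its method and headers.
// Header names are expected in lower case, as Node.js presents incoming headers.
function isWebSocketUpgrade(
  method: string,
  headers: Record<string, string | undefined>,
): boolean {
  const upgrade = (headers['upgrade'] ?? '').trim().toLowerCase();
  // "Connection" may carry several comma-separated tokens, e.g. "keep-alive, Upgrade".
  const connectionTokens = (headers['connection'] ?? '')
    .toLowerCase()
    .split(',')
    .map((token) => token.trim());

  return (
    method.toUpperCase() === 'GET' &&
    upgrade === 'websocket' &&
    connectionTokens.some((token) => token === 'upgrade')
  );
}
```

A proxy that only handles regular request and response cycles will never see these connections succeed, which is why this path deserves an explicit manual test.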

4. Pros of the AI-Engineering Process

Radical Productivity Multiplier

AI handles the “70%” of grunt work—boilerplate proxy setup, type definitions, and package configuration. This allows the architect to focus on the “30%” that matters: edge cases, security, and system boundaries. What was estimated as a multi-week roadmap was compressed into a single afternoon of high-intensity direction.

Lowering the Barrier for Ambitious Refactors

Senior developers can now tackle “Wouldn’t it be nice if…?” projects that were previously too time-expensive to justify. Decoupling a core part of a popular open-source tool is a “hairy” task; AI lowers the activation energy required to attempt it.

Real-Time Tutoring and Verification

For junior and mid-level engineers, the process acts as a “power tool.” By asking the AI why it chose a specific proxy middleware or how it handled certain attributes, the developer gains domain mastery while building.

5. Cons and Risks: The “High Review Burden”

The Hallucination of Correctness

AI tends to solve the “general case” by default. In this n8n refactor, an AI might initially overlook specific backend origin validators that reject cross-origin WebSocket requests. A human architect must know that specific configurations, like changeOrigin: true, are required in the proxy config to satisfy these security checks.
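For readers unfamiliar with the option: in the http-proxy family of libraries (assuming that is what a BFF like this builds on), changeOrigin is documented as changing the Host header of the forwarded request to the target’s host. The sketch below illustrates that documented effect with a hypothetical helper. It is not the library’s code, and whether the rewritten header satisfies a given backend validator depends on which headers that validator compares.

```typescript
// Sketch only: illustrates the documented effect of `changeOrigin`,
// using a hypothetical helper rather than any library's implementation.
type HeaderMap = Record<string, string>;

function buildUpstreamHeaders(
  incoming: HeaderMap,
  target: string,
  changeOrigin: boolean,
): HeaderMap {
  // Copy so the caller's headers are not mutated.
  const headers: HeaderMap = { ...incoming };
  if (changeOrigin) {
    // `URL.host` includes the port when it is not the scheme's default.
    headers['host'] = new URL(target).host;
  }
  // The browser's Origin header is left untouched in this sketch.
  return headers;
}
```

With a browser request that arrived at localhost:3000 and a target of http://localhost:5678, the forwarded Host becomes localhost:5678 when changeOrigin is true and stays localhost:3000 otherwise. This is exactly the kind of detail worth verifying against the backend’s actual validator rather than trusting generated code.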

The Danger of Skill Atrophy

If developers simply “copy-paste and move on,” they fail to build the mental models required to fix things when the AI fails. There is a real risk of “dumping” questionable AI code on senior reviewers, increasing the organizational “review burden.”

Dependency on Context

AI lacks “systems thinking”—it doesn’t know the project’s history or why certain legacy decisions were made unless explicitly told. If the prompt doesn’t include specific context, the AI might spend hours refactoring the wrong middleware.

6. Conclusion: The Rise of the Conductor

The n8n decoupling experiment suggests that the “end of programming” is actually the “end of programming as we know it.” We are moving toward a world where the marginal cost of implementation is decreasing, making Engineering Judgment the most valuable currency in the room.

The future belongs to the “Polymath Architect”—the person who can bridge the gap between business requirements and technical execution by wielding AI as a force multiplier.

Software engineering remains a team sport; while AI can generate the code, humans must still navigate the storms, ensure security, and ultimately decide what is worth building.

Note:

Credit to Addy Osmani’s AI engineering recommendations in his recent book, Beyond Vibe Coding: From Coder to AI-Era Developer. It gave me valuable insight into how to move forward with AI architecture and development. You can learn more about his book on YouTube.